Computation & Collective Lab

I lead the Computation & Collective Lab (C&C Lab) at the Human-Computer Interaction Institute, Carnegie Mellon University. C&C Lab aims to explore how machine intelligence can aid human cooperation and coordination in hybrid systems of humans and machines. We also strive to expand the boundaries of computational social science and social computing by unlocking the potential of computational social experiments.

Machine Intelligence as Social Catalyst

Cooperation is challenging. People may be tempted to prioritize their own interests, which can hinder collective benefits and undermine group efforts. This problem of collective action is widespread in various societal issues, such as traffic congestion, public health problems, and climate change. Even when individuals wish to act for their group, they might not know how to do so when faced with social dilemmas where individual and group benefits are not aligned.

At C&C Lab, we believe that machine intelligence can help address these challenges in collective action. However, it can also lead to unintended negative consequences if it is not designed with careful consideration of human sociality. Harnessing machine intelligence for public goods requires a different approach from one aimed at individual usability and convenience. That’s why C&C Lab examines how social interactions and technology can intersect to promote the emergence of social norms and how this process is related to collective action and the resolution of social dilemmas.

To examine the social effects of machine intelligence, we introduce artificial agents, or “bots,” into social network experiments, allowing human subjects to interact with them. For example, we perform experiments in which groups of humans interact with bots to address the partial optimization problem of social coordination. One such study showed that, counterintuitively and even paradoxically, bots acting with small levels of random noise significantly improved the collective performance of human groups when placed at the network center.

Color coordination game with bots: The goal of this game is for every player in a network to select a color different from those of all their neighbors as quickly as possible. The left group is composed entirely of human players. The right group includes three “noisy” bots (depicted as squares). Red connections indicate where colors conflict. Faster resolution of the color conflicts reflects greater coordination capability of the group.
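The game mechanics described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the code used in our experiments: the function names, the greedy "least-conflicting color" rule, and the `noise` parameter are assumptions introduced here to show how a conflict count and a slightly noisy bot might be expressed.

```python
import random


def count_conflicts(edges, colors):
    """Count the edges whose two endpoints currently share a color."""
    return sum(1 for u, v in edges if colors[u] == colors[v])


def noisy_bot_choice(node, neighbors, colors, palette, noise, rng):
    """Choose a color for a bot (hypothetical rule, assumed for illustration).

    With probability `noise` the bot picks a random color; otherwise it
    picks the color used by the fewest of its neighbors.
    """
    if rng.random() < noise:
        return rng.choice(palette)
    used = [colors[n] for n in neighbors[node]]
    return min(palette, key=lambda c: used.count(c))


# A four-node path network: 0 - 1 - 2 - 3
edges = [(0, 1), (1, 2), (2, 3)]
neighbors = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
palette = ["red", "green", "blue"]
colors = {0: "red", 1: "red", 2: "green", 3: "green"}

rng = random.Random(0)
conflicts_before = count_conflicts(edges, colors)

# With noise set to 0.0 the bot at node 1 always takes the greedy choice.
colors[1] = noisy_bot_choice(1, neighbors, colors, palette, noise=0.0, rng=rng)
conflicts_after = count_conflicts(edges, colors)
```

In this toy network the group has solved the game once `count_conflicts` returns zero. Raising `noise` slightly above zero makes the bot occasionally pick a color at random instead of the greedy one.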

Using this bot-injection approach, we examine how human cooperation can improve when bots intervene in the partner selections made by humans, reshaping social connections locally within a larger group. In another study, we also show that even bots doing nothing (“stingy bots”) can balance structural power and improve collective welfare in resource sharing, although they do not bestow any wealth on people. Our findings highlight the need to incorporate social network mechanisms in designing machine behavior for the welfare of human collectives.

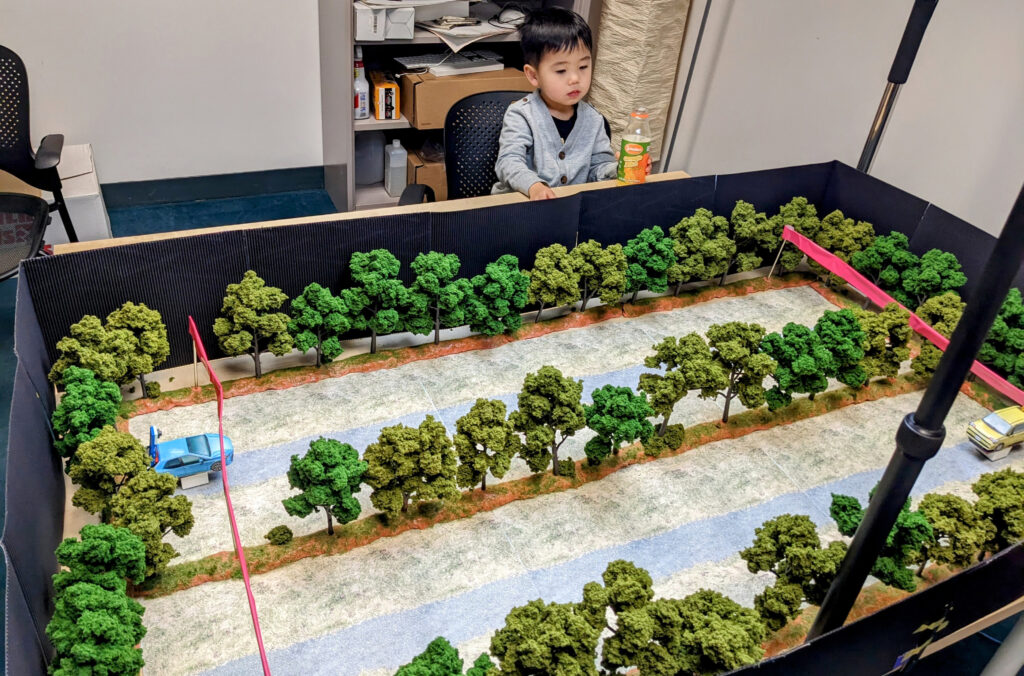

Our research agenda is expanding to include specific social and cultural contexts about human coordination and cooperation. For example, the C&C Lab studies cyber-physical coordination, where humans and machines attempt to coordinate their actions in physical spaces. Using a novel cyber-physical lab experiment (see below), we have provided the first insight that autonomous safety systems in cars can undermine the conventional norms of reciprocity among people. Based on these findings, we are exploring ways to use machine intelligence to mitigate the negative effects on human sociality and restore social harmony.

Chicken game with autonomous assistance: Online participants remotely drive “cars” with an onboard camera view on a single road leading from a start grid straight to a goal area in the opposite direction. In this kind of situation, they often invoke the norm of reciprocity and take turns giving way. But when they drive cars equipped with auto-steering assistance that automatically avoids collisions at the last moment, they leave their decisions to the machines and focus only on their own interests.

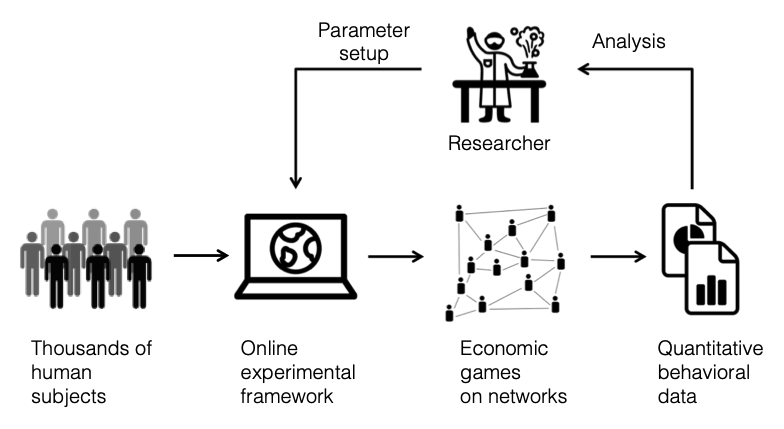

Computational Social Experiments with Humans

C&C Lab is committed to advancing experimental methodology in computational social science, focusing on theoretical contributions and practical insights. The Internet provides a wealth of opportunities, including the ability to quickly recruit large samples and replicate experimental treatments. By leveraging these online settings, we have conducted various social experiments that explore emergency evacuation, resource sharing, and driving coordination.

For example, we have conducted experiments examining emergency evacuation dynamics. In these experiments, we introduced a novel decision-making game that included interpersonal communication, simulating an unpredictable situation caused by a disaster. This game has replicated numerous network phenomena observed in real-life circumstances, including the spread of false rumors, network disintegration, and the emergence of panic. We have observed up to 60 participants playing the game simultaneously to examine the macro outcomes that emerge from micro-interaction.

During gameplay, human players spontaneously spread false information, which could translate to life-threatening danger in real-world disaster scenarios. Our follow-up studies have also shown that people can overcome challenges in social networks as they experience disasters and choose their partners in response to them. Through experimental manipulation, we have clarified that networks facilitate this learning when individual experiences are integrated into structural changes.

Evacuation game: During the game, subjects need to decide whether to evacuate from a “disaster” that may or may not strike them. To find out the truth, subjects who have not yet evacuated can communicate with neighbors by sending “Safe” signals (turning their nodes blue) and “Danger” signals (turning their nodes red). Since a randomly selected subject (the “informant,” shown as “i” in the video) is informed in advance whether a disaster will indeed strike, subjects can choose the correct action if the accurate information originating from the informant is disseminated. In the experimental session shown in the video, a disaster will strike in 75 seconds. Subjects who have evacuated are shown with bold nodes.

We continue to take on the challenge of unleashing the potential of experiments to examine causality in complex and entangled social phenomena. To study the effect of intelligence assistance on driving coordination, for example, we have developed a novel cyber-physical lab experiment that involves physically instantiated robotic vehicles remotely controlled by online participants. This model system offers advantages over having people interact solely in a virtual environment – by providing a physical instantiation of the challenges faced and by enhancing the verisimilitude of the collective action dilemmas.

Cyber-physical lab experiment: This experimental system involves palm-sized robots remotely controlled by online participants (who are not visible in this picture). It combines the advantages of online experiments (virtual lab) with the realization of theoretical concepts in the physical world (physical lab).